ANIME – MANGA

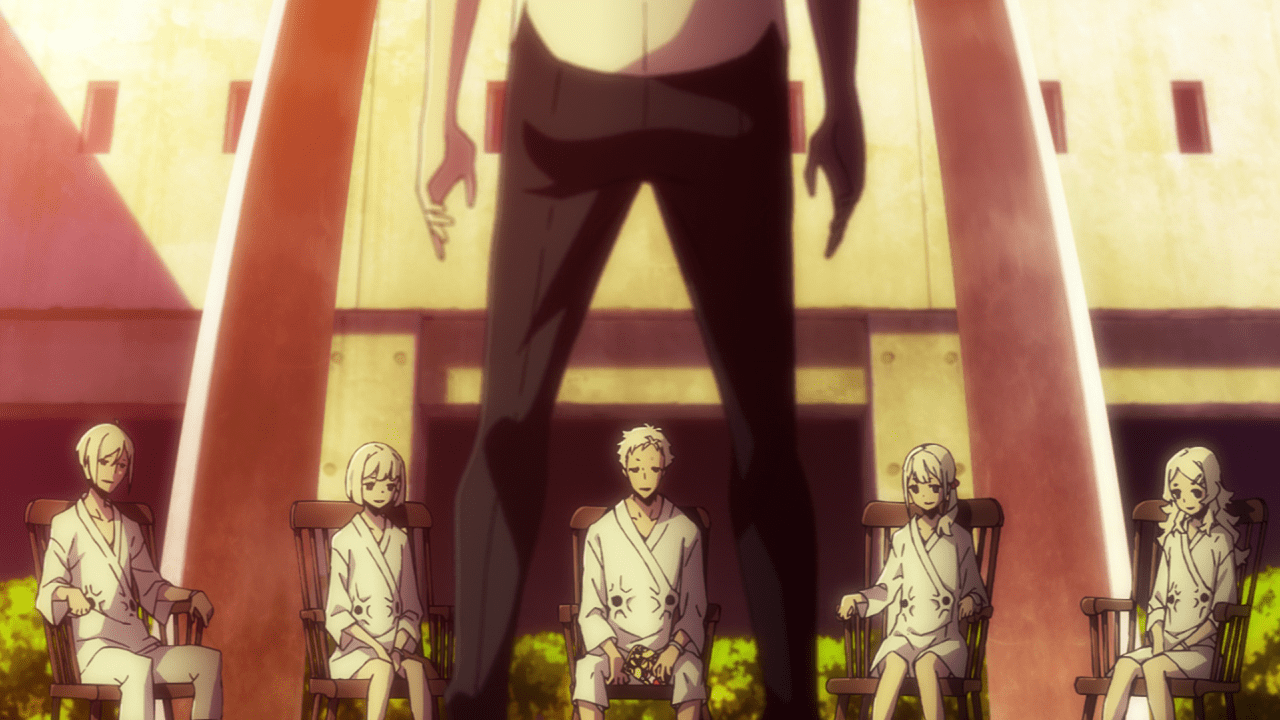

Vinland Saga Season 2 Episode 9: Where All Warriors Gather

In Vinland Saga Season 2 Episode 9, Thorfinn may finally have made a mental and emotional breakthrough after going through many bouts of …

LIFESTYLE

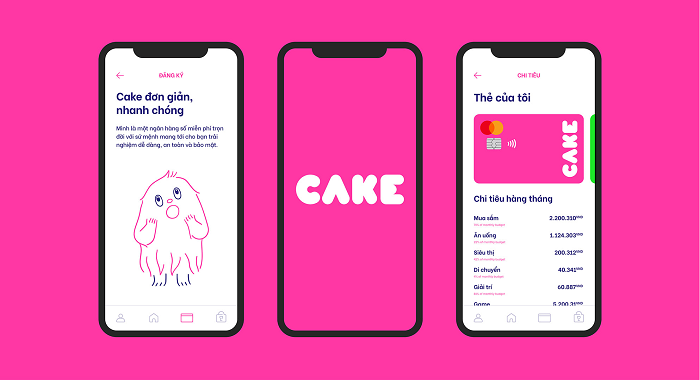

Does Your Debt-to-Income Ratio Affect Your Loan?

Highlights: As personal loans become increasingly popular, it is essential to know how your debt-to-income ratio affects personal loans …

ENTERTAINMENT

5 Great Short Story Collections Worth Reading at Least Once in Your Life

Are you tired of long multi-volume series, or do you lack the time to read long novels? If so, you should try some great short stories …